Unrolling the Scroll: Probing the Security of a Zero-Knowledge Roll-Up

Introduction

A few months back, our group stumbled upon an early bug bounty program hosted by Scroll, an up-and-coming zero-knowledge roll-up. They were offering big money - $5,000 each - for finding buggy transactions in their testnet.

As hackers always on the lookout for new frontiers, we were immediately drawn to Scroll's pre-launch bug bounty. The announcement landed squarely on our radar for hunting down vulnerabilities!

We went into hacking Scroll with zero knowledge of their clever zero-knowledge roll-up setup. By fuzzing their code, we found an interesting crash, and chasing it gave us a glimpse into how seriously they take security.

In this blog post, we will not only narrate our exciting hacking journey but also share our observations and insights about the security measures employed by Scroll.

Scroll: A Security-focused Scaling Solution for Ethereum

Scroll is a zkRollup Layer 2 dedicated to enhancing Ethereum scalability through a bytecode-equivalent zkEVM circuit. The mainnet of Scroll was launched last month.

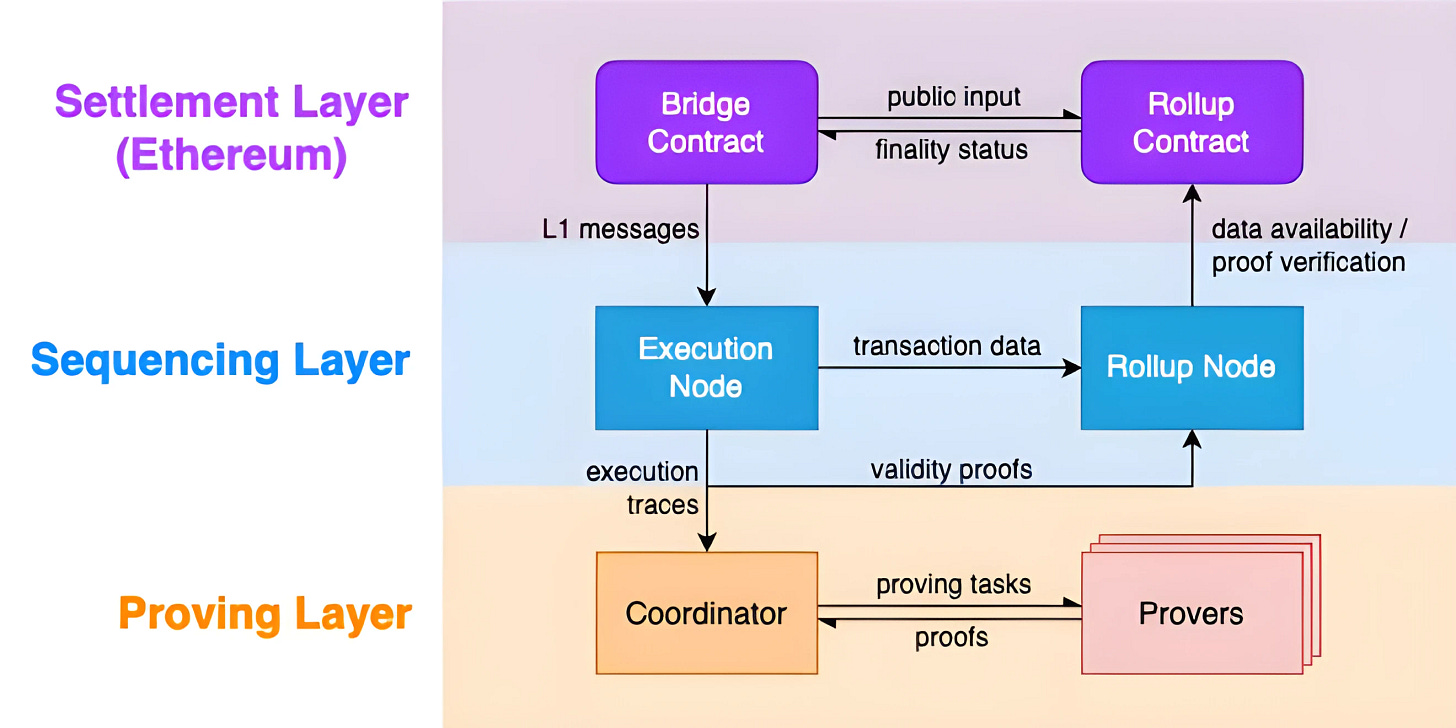

Scroll is composed of three layers: settlement layer, sequencing layer and proving layer.

The settlement layer refers to smart contracts deployed on the Ethereum network, where the roll-up submits and verifies the validity of computations conducted on layer 2. The sequencing layer forms the major user-facing component as an isolated yet fully EVM-compatible chain.

Together, these two layers make up the typical architecture found in alternative layer 2 public blockchain solutions, with the settlement layer confirming operations from the standalone sequencing environment.

The proving layer differentiates zero-knowledge rollups from traditional implementations, representing an additional component. The most captivating advantage conferred by zk techniques is the rollup's ability to give a succinct proof of the entire layer 2 chain state without broadcasting individual transactions to Ethereum, thus ensuring time and space efficiency.

However, generating these proofs demands computation well beyond the capabilities of regular execution nodes, so Scroll set up a layer dedicated to this intensive work. Proving tasks are handled by very powerful nodes or distributed across a group of such nodes. Because the specialized proving layer focuses only on that job, layer 2 keeps running without the steep resource cost of proving slowing it down.

The Rolling-up Scroll: Execution, Commitment and Finalization

The rollup process can be broken down into three phases:

Transaction Execution

Batching and Data Commitment

Proof Generation and Finalization

The first two layers run asynchronously yet cohesively, irrespective of the additional computation in the proving layer. However, the unavoidable delay introduced by proving could leave the two layers inconsistent with each other.

Consider this scenario: A valid transaction is confirmed by the sequencing layer (L2Geth), and the settlement layer records the commitment with its batch details. However, the proving layer cannot craft a proof for it! Only after extensive GPU hours does the prover realize they are unable to generate a valid witness, or the proof does not match what is needed.

It is important to note that this differs from the chain reorganization (reorg) issues seen on other chains. Reorgs in those systems are typically caused by networking difficulties among interconnected nodes, and by design they happen and resolve silently. The current version of Scroll runs in a controlled (centralized) manner. A proving failure on Scroll, however, may not be so straightforward to resolve.

If a transaction is unprovable, it stops the full batch from finalizing. This blocks the whole network. Now you could revert any transactions after the toxic one and remove it and related transactions, like a hard fork. Or you could manually add fake proofs just for these cases. But that second option would go against the purpose of the roll-up system by adding unproven transactions.
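The blocking effect described above can be sketched with a toy model. The `Batch` type and `finalize_in_order` function are hypothetical names made up for this illustration; they are not part of Scroll's code, and the model assumes only that batches must be finalized strictly in order.

```rust
/// Toy model of a batch committed to the settlement layer (hypothetical type).
#[derive(Debug, Clone, Copy)]
struct Batch {
    index: u64,
    /// Whether the prover is able to produce a valid proof for this batch.
    provable: bool,
}

/// Finalize committed batches strictly in order and return the index of the
/// last finalized batch, or `None` if not even the first one could be proven.
fn finalize_in_order(batches: &[Batch]) -> Option<u64> {
    let mut last_finalized = None;
    for batch in batches {
        if !batch.provable {
            // No proof, no finalization: every later batch is stuck as well.
            break;
        }
        last_finalized = Some(batch.index);
    }
    last_finalized
}
```

With batches 0, 1 and 2 committed and only batch 1 unprovable, `finalize_in_order` returns `Some(0)`: batch 2 is perfectly provable on its own, yet it cannot finalize behind the toxic batch.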

It is a tricky situation that requires carefully considering options like forks or alternate proofs to resolve inconsistencies without compromising integrity. Handling troublesome transactions in a safe way is exactly what the Scroll team is worried about. That is why they set up a bug bounty program for this specific problem!

Fuzzing the Bus Mapping: Unleashing Chaos in Search of Vulnerabilities

There are some potential reasons that could cause prover bugs, as listed on the bug bounty page:

Missing information in the generated trace.

Errors when the bus-mapping module processes traces from L2Geth.

Divergent behavior between L2Geth and the zkEVM circuit.

Bypassing the circuit capacity check.

These bugs could appear in various parts of the Scroll project workflow:

Transactions are validated and executed by L2Geth, which generates execution traces.

The bus-mapping module converts traces to a format for the zkEVM circuit.

The witness and zkEVM circuit generate the proof, requiring a match with L2Geth behavior.

A capacity checker is needed because zkEVM circuits have finite capacity.

The trace generator and circuit capacity checker are implemented in L2Geth. We manually reviewed the Golang code added by the Scroll team and the code seems well written.

As we did not have the budget to set up a powerful prover node capable of generating actual proofs, we decided to leave the bug hunting in zkEVM circuits as a challenge for moneyed hackers. (Conducting such proofs could require computational resources more expensive than the bounty rewards.)

This effectively ruled out targeting the circuits themselves. The only component remaining within our scope to audit was the bus-mapping module, as evaluating its code would not demand generating a full proof.

The bus mapping module is responsible for parsing transaction traces executed in L2Geth and converting them into the witness required to generate proofs.

In reviewing the bus-mapping source code, we discovered that the project has comprehensive unit tests. With only minor modifications to them, we were able to construct a basic fuzzing harness.

```rust
use libfuzzer_sys::fuzz_target;

...

fuzz_target!(|data: &[u8]| {
    let code = Bytecode::from_raw_unchecked(data.into());

    // Get the execution steps from the external tracer
    let block: GethData = TestContext::<2, 1>::new(
        None,
        account_0_code_account_1_no_code(code),
        tx_from_1_to_0,
        |block, _tx| block.number(0xcafeu64),
    )
    .unwrap()
    .into();

    let mut builder = BlockData::new_from_geth_data(block.clone()).new_circuit_input_builder();

    builder
        .handle_block(&block.eth_block, &block.geth_traces);
});
```

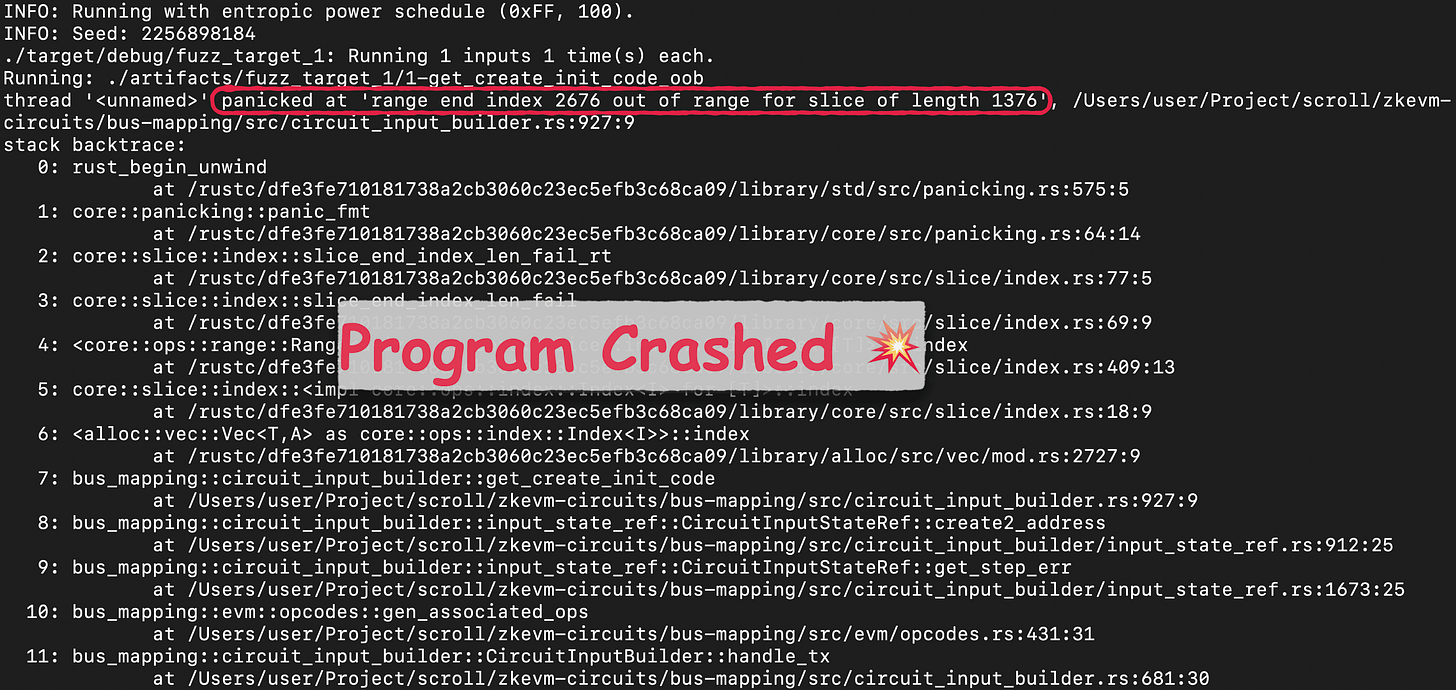

Within five minutes, we were able to trigger an interesting crash using cargo-fuzz. It generated corpus input with considerable complexity, yet the underlying crash seemed straightforward.

The Discovery: Unveiling a Subtle Bug in Scroll

Specifically, the crash occurred within the get_create_init_code function: the offset_end variable exceeded the length of the recorded memory, so the slice access went out of bounds. In safe Rust this surfaces as a panic rather than a true segmentation fault, but it still aborts trace processing. Despite the complexity of the randomly generated fuzzing input, the crash proved easily reproducible and localized to a single out-of-bounds memory access.

```rust
pub fn get_create_init_code<'a>(
    call_ctx: &'a CallContext,
    step: &GethExecStep,
) -> Result<&'a [u8], Error> {
    let offset = step.stack.nth_last(1)?.low_u64() as usize;
    let length = step.stack.nth_last(2)?.as_usize();

    let mem_len = call_ctx.memory.0.len();
    if offset >= mem_len {
        return Ok(&[]);
    }

    let offset_end = offset.checked_add(length).unwrap_or(mem_len);

    Ok(&call_ctx.memory.0[offset..offset_end])
}
```
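To see the failure in isolation, here is a minimal stand-alone sketch of the same slicing logic. It is an illustration under assumptions: the call context is replaced by a plain byte slice, and `init_code_unchecked` and `slicing_panics` are hypothetical helper names, not functions from the bus-mapping crate.

```rust
use std::panic;

/// Stand-alone mirror of the slicing logic above, with the call context
/// replaced by a plain byte slice (an assumption made for illustration).
fn init_code_unchecked(memory: &[u8], offset: usize, length: usize) -> &[u8] {
    if offset >= memory.len() {
        return &[];
    }
    // `checked_add` only guards against integer overflow; it does not clamp
    // `offset_end` to the size of `memory`.
    let offset_end = offset.checked_add(length).unwrap_or(memory.len());
    &memory[offset..offset_end] // panics when offset_end > memory.len()
}

/// Returns true if slicing `memory` with the given offset and length panics.
fn slicing_panics(memory: Vec<u8>, offset: usize, length: usize) -> bool {
    panic::catch_unwind(move || init_code_unchecked(&memory, offset, length).len()).is_err()
}
```

With 32 bytes of memory, an offset of 0 and a length of 100, `slicing_panics` returns `true`. Once the memory holds at least 100 bytes, or the offset lies entirely past the end of memory, the call returns normally.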

The vulnerable function can be triggered when calling the create2_address function.

```rust
pub(crate) fn create2_address(&self, step: &GethExecStep) -> Result<Address, Error> {
    let salt = step.stack.nth_last(3)?;
    let call_ctx = self.call_ctx()?;
    let init_code = get_create_init_code(call_ctx, step)?.to_vec();
    let address = get_create2_address(self.call()?.address, salt.to_be_bytes(), init_code);

    log::trace!(
        "create2_address {:?}, from {:?}, salt {:?}",
        address,
        self.call()?.address,
        salt
    );

    Ok(address)
}
```

The CREATE2 opcode enables deterministic prediction of contract deployment addresses without actual deployment. The underlying idea is to make addresses independent of future blockchain events - a contract can always be deployed at its precomputed address regardless of what happens.

New contract addresses are a function of:

0xFF, a constant that prevents collisions with regular CREATE

The sending account address

A salt value provided by the sender

The initialization bytecode (init code) of the contract to be deployed

The address is calculated as (per EIP-1014):

new_address = keccak256(0xFF ++ sender ++ salt ++ keccak256(init_code))[12:]
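As a rough sketch of how these inputs are laid out, the snippet below builds the hash preimage described by EIP-1014: the constant 0xFF, the 20-byte sender, the 32-byte salt and the 32-byte Keccak-256 hash of the init code. The hashing itself is deliberately left out because the Rust standard library has no Keccak-256; the caller is assumed to supply the digests from a hashing crate of their choice. `create2_preimage` and `address_from_digest` are hypothetical names for this illustration.

```rust
/// Build the 85-byte CREATE2 preimage defined by EIP-1014:
/// 0xff ++ sender (20 bytes) ++ salt (32 bytes) ++ keccak256(init_code) (32 bytes).
fn create2_preimage(sender: [u8; 20], salt: [u8; 32], init_code_hash: [u8; 32]) -> [u8; 85] {
    let mut preimage = [0u8; 85];
    preimage[0] = 0xff;
    preimage[1..21].copy_from_slice(&sender);
    preimage[21..53].copy_from_slice(&salt);
    preimage[53..85].copy_from_slice(&init_code_hash);
    preimage
}

/// The new address is the last 20 bytes of the 32-byte Keccak-256 digest
/// of the preimage (the digest is computed elsewhere by the caller).
fn address_from_digest(digest: [u8; 32]) -> [u8; 20] {
    let mut address = [0u8; 20];
    address.copy_from_slice(&digest[12..]);
    address
}
```

Because the init code enters the preimage through its hash, the address computation has to read the full init code from memory first, which is exactly the step where the out-of-bounds access occurs.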

The calculation of the new address triggered the memory access violation bug by fetching the bytecode from an unallocated memory region.

We created a basic Proof of Concept (PoC) by invoking the CREATE2 instruction with specific parameters that were expected to exceed memory boundaries: value=0, offset=0, length=100, salt=0. Surprisingly, this did not result in a crash as expected.

Analyzing the crashing corpus revealed that the CREATE2 instruction had been executed twice. This raised concerns about potential issues in the logic handling conflict resolution when multiple create2 operations share the same salt.

Simply put, the EVM does not allow multiple contracts to be created at the same address. The second creation will fail due to a contract address collision error. When parsing traces from L2Geth, the bus-mapping module tries to determine the underlying cause of any error it encounters. If a CREATE2 instruction fails, it hashes the associated init code to derive the target contract address and check whether that address is already taken.

```rust
if matches!(step.op, OpcodeId::CREATE | OpcodeId::CREATE2) {
    let (address, contract_addr_collision_err) = match step.op {
        OpcodeId::CREATE => (
            self.create_address()?,
            ContractAddressCollisionError::Create,
        ),
        OpcodeId::CREATE2 => (
            self.create2_address(step)?,
            ContractAddressCollisionError::Create2,
        ),
        _ => unreachable!(),
    };

    let (found, _) = self.sdb.get_account(&address);
    if found {
        log::debug!(
            "create address collision at {:?}, step {:?}, next_step {:?}",
            address,
            step,
            next_step
        );
        return Ok(Some(ExecError::ContractAddressCollision(
            contract_addr_collision_err,
        )));
    }
}
```

Nothing goes wrong until memory expansion occurs. If an instruction dereferences a location beyond the current memory boundary, memory is automatically expanded and initialized with zeroes. This is handled correctly by L2Geth and bus mapping for the execution of every opcode.

However, memory expansion does not take place inside the error handler for failed executions. This is why the subtle bug can only be triggered during a contract address collision, when the failed create2 invocation does not cause an expansion of memory space before the address calculation dereferences outside the initialized region.
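The difference between reading before and after expansion can be illustrated with a small sketch. This is not the fix Scroll deployed, which we do not reproduce here; `expand_memory` and `read_range` are hypothetical helpers that only model the EVM rule that memory grows in zero-filled 32-byte words.

```rust
/// Grow `memory` with zero bytes so that `offset..offset + length` is
/// addressable, rounding the new size up to a multiple of 32 bytes.
fn expand_memory(memory: &mut Vec<u8>, offset: usize, length: usize) {
    if length == 0 {
        return; // zero-length accesses never expand memory
    }
    let end = offset
        .checked_add(length)
        .expect("memory range overflows usize");
    // Round up to whole 32-byte words.
    let words = end / 32 + usize::from(end % 32 != 0);
    let new_len = words * 32;
    if new_len > memory.len() {
        memory.resize(new_len, 0);
    }
}

/// Read without panicking: `None` if the range is not fully inside `memory`.
fn read_range(memory: &[u8], offset: usize, length: usize) -> Option<&[u8]> {
    let end = offset.checked_add(length)?;
    memory.get(offset..end)
}
```

Starting from 32 bytes of memory, `read_range(&memory, 0, 100)` yields `None`. After `expand_memory(&mut memory, 0, 100)` the memory is 128 bytes long and the same read succeeds, with the new bytes zeroed. An error handler that reads the init code without this expansion step sees only the shorter, pre-expansion memory.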

The Circuit Capacity Checker: An Additional Layer of Defense

We launched the attack script against our local test node, but still nothing happened. We thought we were close to claiming the bug bounty, but the Scroll team was one step ahead of us: the circuit capacity checker (CCC) blocked the potential attack.

The CCC, as you may remember, is designed to calculate the required circuit gates for a specific transaction and reject those considered too costly. The L2Geth node invokes CCC to simulate incoming transactions. Any bugs within the bus mapping module would also affect CCC. If CCC were to crash, the L2Geth node would simply drop the troublesome transaction rather than exposing a vulnerability.

The CCC provides extra protection as a firewall. It evaluates transaction costs before execution. This helps prevent unknown attacks from overloading resources or exploiting memory bugs through crashing. This auxiliary defense strengthens the overall security of the platform.
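The gatekeeping idea can be sketched as follows. This is a simplified model under assumptions, not how L2Geth actually invokes the CCC: `Verdict`, `screen_transaction` and the `estimate_cost` closure are hypothetical, and the closure stands in for the real capacity checker.

```rust
use std::panic;

/// Hypothetical verdicts a sequencer could derive from the capacity check.
#[derive(Debug, PartialEq)]
enum Verdict {
    Accept,
    /// The transaction costs more than the circuit can accommodate.
    TooCostly,
    /// The checker itself crashed while processing the transaction.
    CheckerCrashed,
}

/// Run `estimate_cost` (a stand-in for the real capacity checker) and turn
/// any panic into a rejection instead of letting it take the node down.
fn screen_transaction<F>(estimate_cost: F, max_cost: u64) -> Verdict
where
    F: FnOnce() -> u64 + panic::UnwindSafe,
{
    match panic::catch_unwind(estimate_cost) {
        Ok(cost) if cost <= max_cost => Verdict::Accept,
        Ok(_) => Verdict::TooCostly,
        Err(_) => Verdict::CheckerCrashed,
    }
}
```

In this model only `Verdict::Accept` lets a transaction into a block. A transaction that crashes the checker is dropped before it can reach the prover, which matches what we observed on our local test node.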

Conclusion

We reported our findings to the Scroll team, who quickly deployed a fix for the out-of-bounds memory access bug just before the launch of their mainnet. Our speed-hacking attempt on Scroll did not yield a bounty, but we are glad to have seen how robust their rollup's security is. The Scroll team clearly did great work fortifying it. Props to them!

While this hack did not pay off, we are excited by how much we learned dissecting their defenses. It inspired us to keep challenging ourselves with zk-rollups and web3. The possibilities are endless - our learning has just begun!

About Offside Labs

Offside Labs is a professional team specializing in security research and vulnerability detection. Our primary focus includes rigorous bug hunting, comprehensive audits, and expert security consultation. We have extensive experience in the web3 environment and strive to enhance the security posture of our clients' projects in this rapidly evolving digital space.